Research

Vision and space

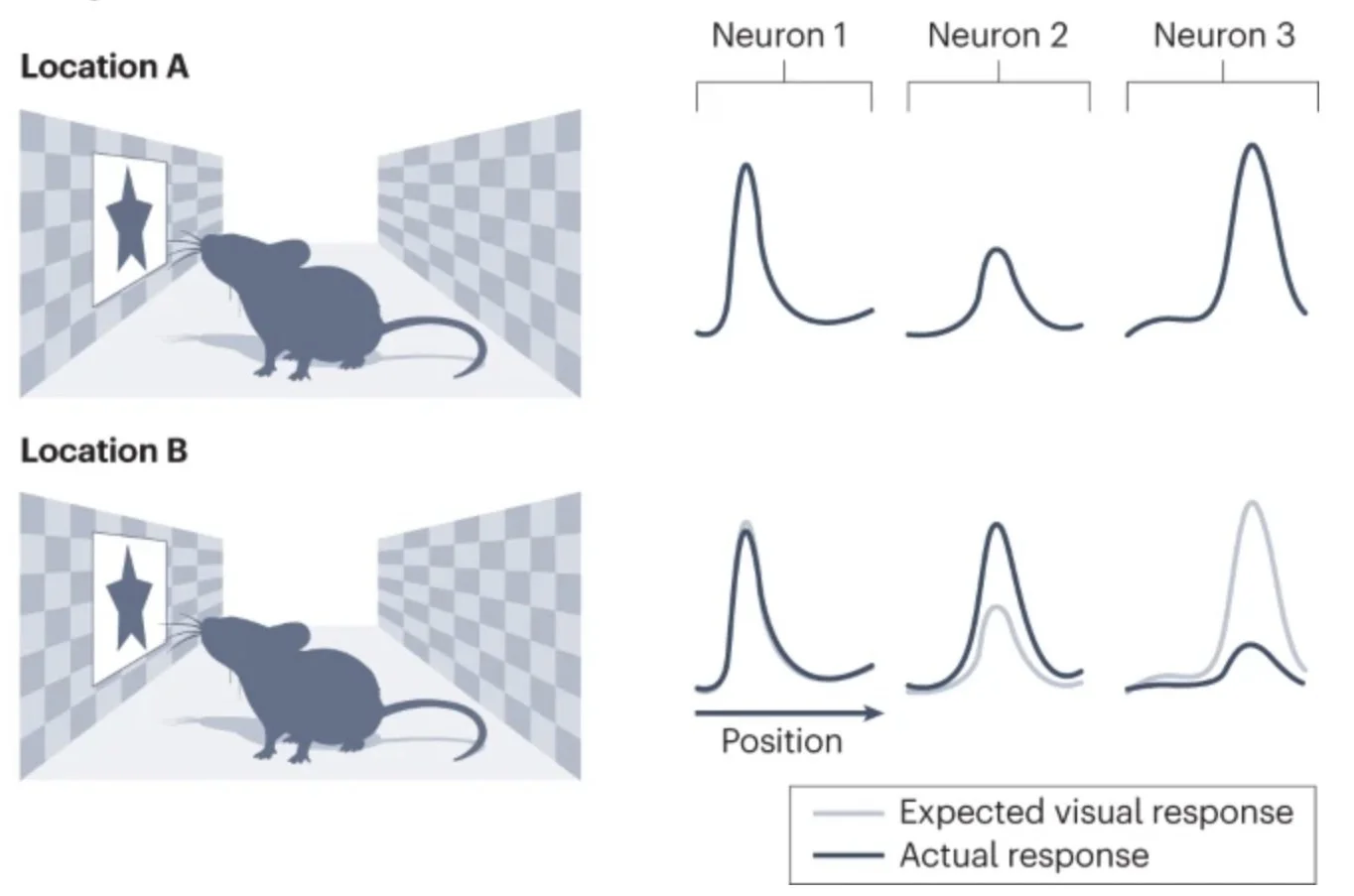

While it has long been known that the brain’s representation of space (for example, hippocampal place cells) uses visual information, it is still unknown how the hippocampal formation transforms visual information from a representation relative to the eyes (egocentric) into a representation of the world (allocentric).

We investigate the circuits and computations that allow vision to be interpreted for spatial representation, and have been uncovering complex interactions between the visual and spatial systems.

Selected related publications:

Saleem, Diamanti, et al, Nature, 2018

Diamanti et al, eLife, 2021

Saleem & Busse, Nature Reviews Neuroscience, 2023

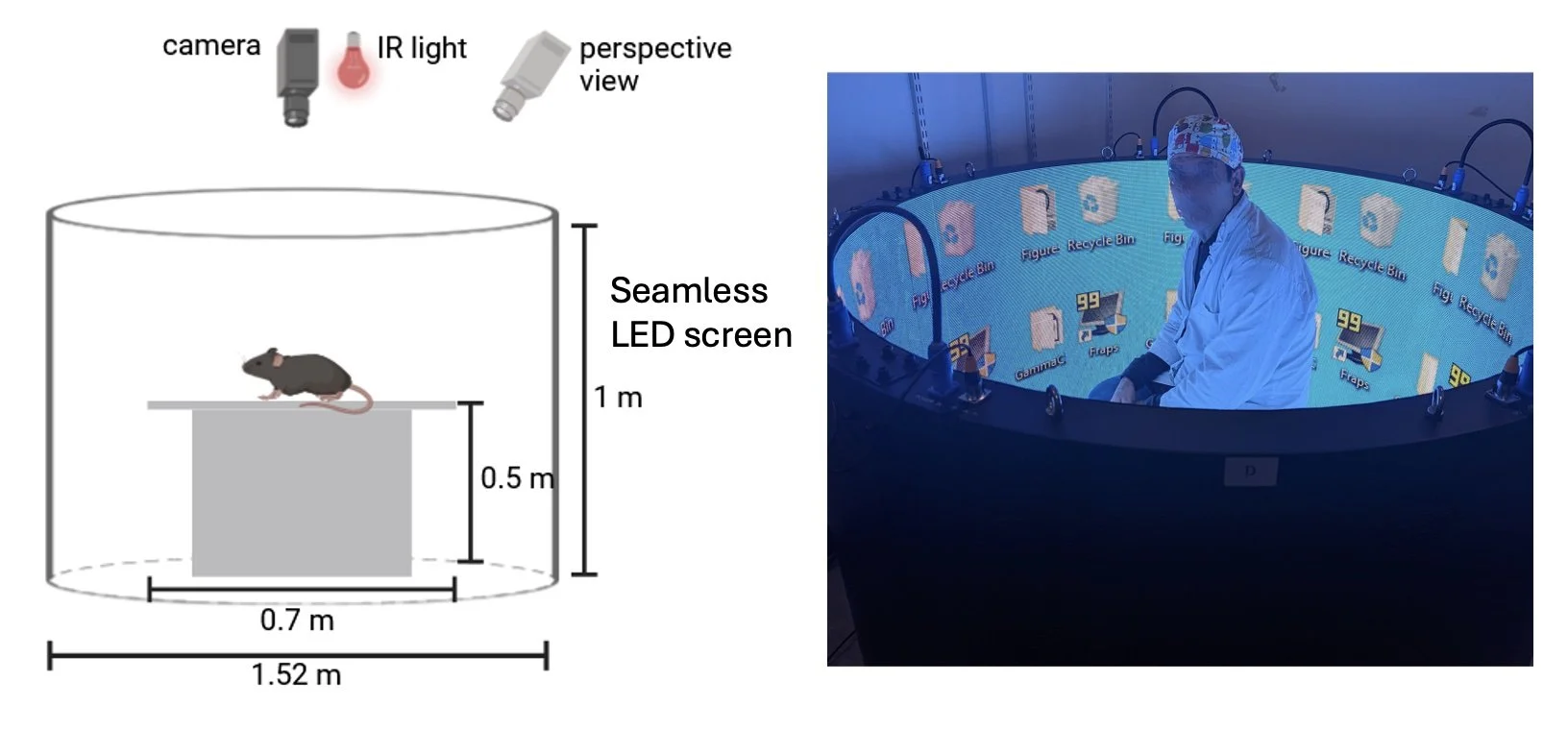

Vision and Head-direction

Animals from insects to mammals can orient themselves in an environment using an internal compass in the brain: the head-direction (HD) system. While the HD system is known to faithfully follow visual cues, how the visual system supports this is not fully understood. Using a seamless cylindrical augmented reality environment and chronic large-scale electrophysiology, we are investigating the circuits and computations that transform visual information into a head-direction signal.

Selected related publications:

Saleem & Busse, Nature Reviews Neuroscience, 2023

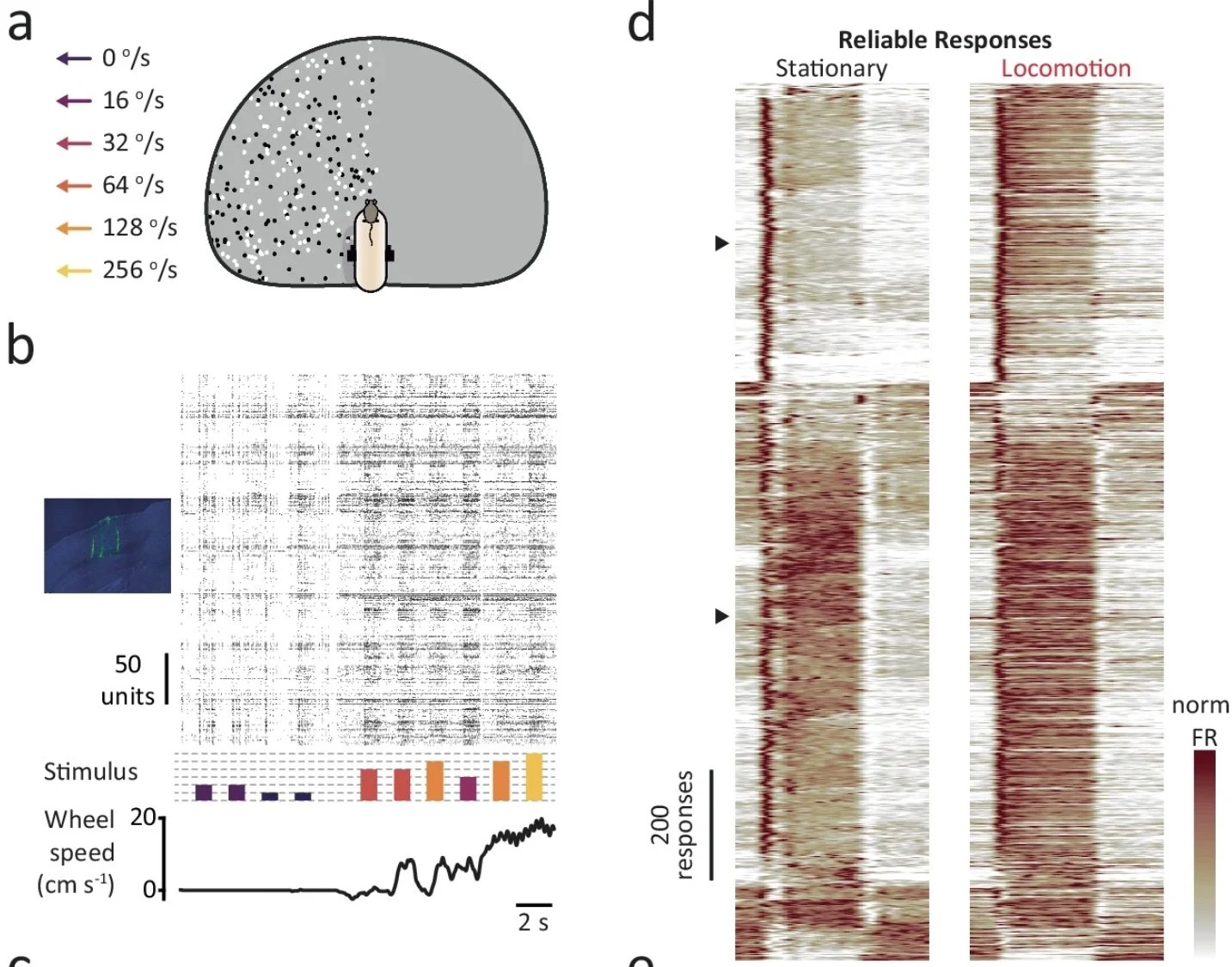

Vision during movement

Vision is one of the most widely studied functions of the brain. However, much of our understanding of vision comes from experiments in which the subject is stationary. We have made many discoveries by observing how visual processing changes when animals run on a treadmill. We are continuing this research and are now also investigating how visual processing changes when animals move freely around the environment.

Selected related publications:

Saleem et al, Nature Neuroscience, 2013

Saleem et al, Neuron, 2017

Muzzu & Saleem, Cell Reports, 2021

Horrocks et al, Nature Communications, 2024

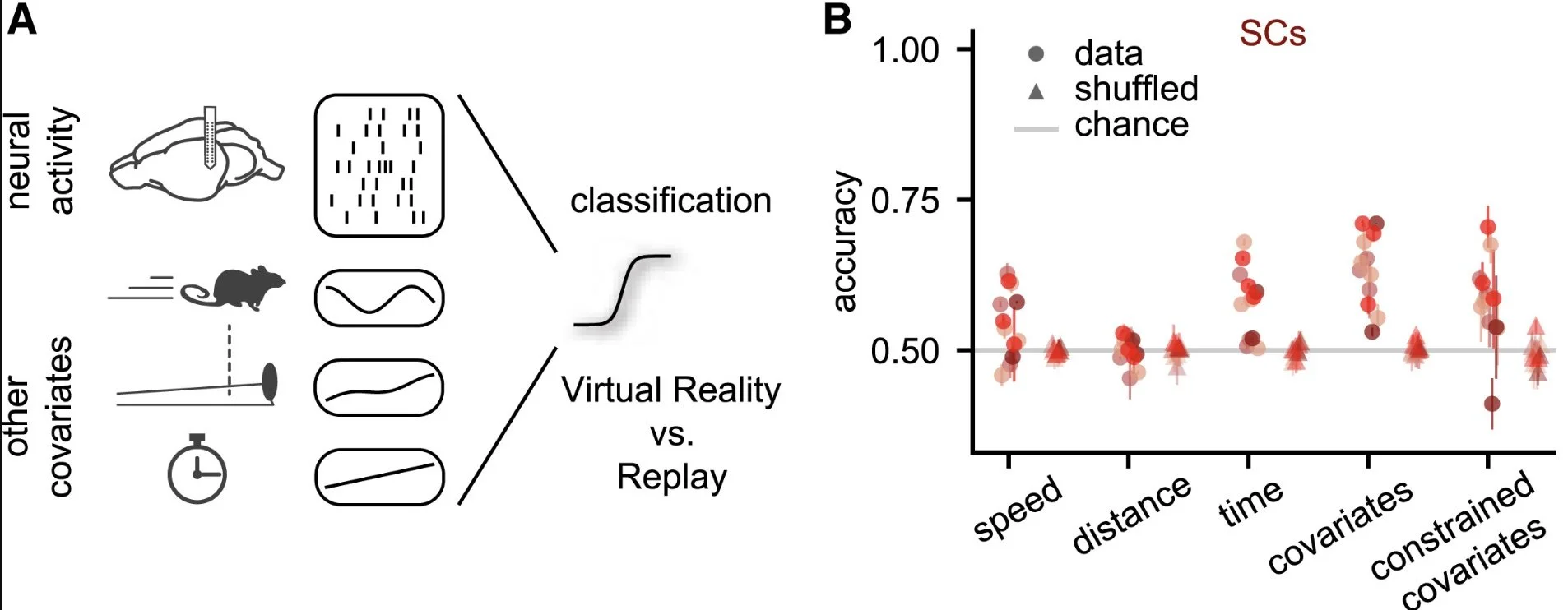

Neural Dynamics & Population Coding

New technologies allow us to record simultaneously from large populations of neurons in one or several brain areas. We study how information about the world is encoded in the temporal dynamics and population codes of these neurons.

Selected related publications:

Saleem et al, Nature Neuroscience, 2013

Saleem et al, Nature, 2018

Fournier et al, Current Biology, 2020

Horrocks et al, Nature Communications, 2024

Zucca, Schulz, et al, Current Biology, 2025

Computational Models & NeuroAI

We use computational neuroscience approaches to better understand neural systems. Many of our studies use phenomenological and other computational models to characterise neural representations, and supervised and unsupervised machine learning approaches to better understand population dynamics.

Selected related publications:

Saleem et al, Nature Neuroscience, 2013

Fournier et al, Current Biology, 2020

Zucca, Schulz, et al, Current Biology, 2025

BonVision & Open Science

Open Science: Our lab strongly believes in open and accessible science, and we publish our code and data publicly on GitHub and figshare.

BonVision: In collaboration with NeuroGEARS Ltd, we developed BonVision, an accessible, open-source visual stimulus generator that enables replicable open- and closed-loop experiments, including virtual and augmented reality environments.

Selected related publications:

Lopes et al, eLife, 2021